OCR - What does it mean?

OCR explained

OCR – short for Optical Character Recognition – is a text recognition technology. Using OCR, it is possible to convert analog text into machine-readable characters. This can be useful, for example, if you have photographed or scanned a document and want to process the content further.

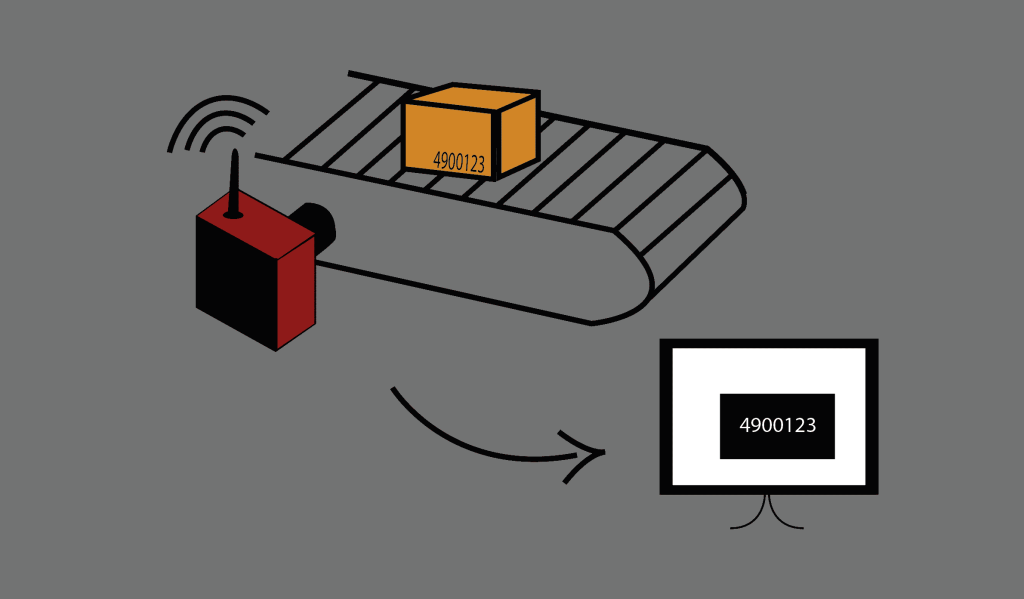

OCR is also widely used in industry. For example, an identification number printed on a component can be read and assigned using OCR. Smart cameras are often used for this: they photograph the component with its printed number and evaluate the image directly.

But how does OCR work?

Reading out the characters requires several image processing steps. After the image is captured, it is binarized. First, an adaptive thresholding method calculates a separate threshold value for each pixel from the gray values in its neighborhood. Each pixel is then set to either the maximum value of 255 or the minimum value of 0, depending on whether its gray value lies above or below that threshold. This produces a binary black-and-white image, which on its own is not yet sufficient for OCR.
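The binarization step can be sketched in a few lines of pure Python. This is only a minimal illustration: the article does not say which adaptive method is used, so the local mean over a square window and the names `adaptive_threshold`, `window` and `offset` are assumptions made for this example.

```python
def adaptive_threshold(gray, window=3, offset=0):
    """Binarize a grayscale image (list of rows, values 0..255).

    Assumption: the threshold of a pixel is the mean gray value of the
    window around it, minus a constant offset.
    """
    height, width = len(gray), len(gray[0])
    half = window // 2
    binary = []
    for y in range(height):
        row = []
        for x in range(width):
            # Collect the neighborhood, clipped at the image border.
            neighborhood = [
                gray[ny][nx]
                for ny in range(max(0, y - half), min(height, y + half + 1))
                for nx in range(max(0, x - half), min(width, x + half + 1))
            ]
            threshold = sum(neighborhood) / len(neighborhood) - offset
            row.append(255 if gray[y][x] > threshold else 0)
        binary.append(row)
    return binary


# A dark stroke (value 40) on a background that gets brighter to the right.
gray = [[100, 110, 40, 130, 140] for _ in range(3)]
binary = adaptive_threshold(gray, window=3, offset=10)
print(binary[0])  # [255, 255, 0, 255, 255]
```

Because every pixel is compared with its own surroundings, the stroke is separated cleanly although the background brightness changes across the image. Here the dark characters end up as 0; an inverted output would make them the foreground for the following steps.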

To eliminate remaining noise, binarization is followed by a connected component analysis, after which all components smaller than a manually defined threshold value are removed. The aim of the analysis is to assign a label to each foreground pixel. For every foreground pixel, the four directly adjacent pixels and the four diagonally adjacent pixels, together known as the 8-neighborhood, are considered. If a neighboring pixel already has a label, that label is adopted. Otherwise, a new label is created and assigned to the pixel.
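The labeling and the subsequent noise removal might look roughly like the sketch below. Note that it uses a flood fill per component instead of the scan-based label adoption described above; both approaches produce the same components. The function names and the `min_size` parameter are hypothetical, and the foreground is assumed to be stored as non-zero values.

```python
from collections import deque

# Offsets of the 8-neighborhood: 4 direct and 4 diagonal neighbors.
NEIGHBORS_8 = [(-1, -1), (-1, 0), (-1, 1), (0, -1),
               (0, 1), (1, -1), (1, 0), (1, 1)]


def label_components(binary):
    """Return (labels, sizes): label image (0 = background) and pixel counts."""
    height, width = len(binary), len(binary[0])
    labels = [[0] * width for _ in range(height)]
    sizes = {}
    next_label = 1
    for y in range(height):
        for x in range(width):
            if binary[y][x] == 0 or labels[y][x] != 0:
                continue
            # Unlabeled foreground pixel: create a new label and flood it.
            labels[y][x] = next_label
            queue = deque([(y, x)])
            count = 0
            while queue:
                cy, cx = queue.popleft()
                count += 1
                for dy, dx in NEIGHBORS_8:
                    ny, nx = cy + dy, cx + dx
                    if (0 <= ny < height and 0 <= nx < width
                            and binary[ny][nx] != 0 and labels[ny][nx] == 0):
                        labels[ny][nx] = next_label
                        queue.append((ny, nx))
            sizes[next_label] = count
            next_label += 1
    return labels, sizes


def remove_small_components(binary, min_size):
    """Keep only components with at least min_size pixels."""
    labels, sizes = label_components(binary)
    return [[1 if lab and sizes[lab] >= min_size else 0 for lab in row]
            for row in labels]


binary = [
    [1, 1, 0, 0, 0],
    [0, 1, 0, 0, 1],  # the lone pixel on the right is noise
    [0, 0, 1, 0, 0],  # connected to the stroke only diagonally
    [0, 0, 0, 0, 0],
]
labels, sizes = label_components(binary)
cleaned = remove_small_components(binary, min_size=2)
print(sizes)  # {1: 4, 2: 1}
```

The diagonal pixel stays part of the first component only because the 8-neighborhood is used; with a 4-neighborhood it would receive its own label and be removed as noise.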

To further optimize the image for OCR, morphological filtering is performed in the final step. The binary image is first dilated and then eroded. Both operations move a structuring element across the image and decide for each pixel whether it belongs to the foreground of the result. In dilation, a pixel is added to the result as soon as the structuring element placed at that pixel touches the foreground. The example shows the effect on text recognition:

As with dilation, the structuring element is translated across all points of the binary image. In contrast to dilation, erosion adds a point to the result set only if the structuring element, shifted to that point, lies completely within the foreground. The following example shows the result of erosion using different radii 𝑟 of the structuring element.
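To make the two operations concrete, here is a small pure-Python sketch with a disk-shaped structuring element of radius r. The disk shape, the function names and the rule that pixels outside the image count as background are assumptions chosen for this illustration.

```python
def disk_offsets(radius):
    """Offsets (dy, dx) of a disk-shaped structuring element."""
    return [(dy, dx)
            for dy in range(-radius, radius + 1)
            for dx in range(-radius, radius + 1)
            if dy * dy + dx * dx <= radius * radius]


def dilate(binary, radius):
    """Every foreground pixel stamps the structuring element into the result."""
    height, width = len(binary), len(binary[0])
    offsets = disk_offsets(radius)
    result = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            if binary[y][x]:
                for dy, dx in offsets:
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < height and 0 <= nx < width:
                        result[ny][nx] = 1
    return result


def erode(binary, radius):
    """A pixel survives only if the whole structuring element fits into the foreground."""
    height, width = len(binary), len(binary[0])
    offsets = disk_offsets(radius)
    return [[int(all(0 <= y + dy < height and 0 <= x + dx < width
                     and binary[y + dy][x + dx] != 0
                     for dy, dx in offsets))
             for x in range(width)]
            for y in range(height)]


def count(image):
    """Number of foreground pixels."""
    return sum(map(sum, image))


# A filled 5x5 square inside a 7x7 image.
square = [[1 if 1 <= y <= 5 and 1 <= x <= 5 else 0 for x in range(7)]
          for y in range(7)]
eroded_counts = [count(erode(square, r)) for r in (1, 2, 3)]
dilated_count = count(dilate(square, 1))
print(eroded_counts)  # [9, 1, 0]
print(dilated_count)  # 45
```

The square shrinks with every increase of the radius and disappears completely at r = 3, which is why the radius has to be matched to the stroke width of the characters.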

If both operations are applied in succession with the same structuring element, the result is a morphological opening (erosion followed by dilation) or a morphological closing (dilation followed by erosion). Note that closing always fills the cavities of the digits, and that a radius that is too large causes neighboring digits to merge, which is undesirable.
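The effect of the radius can be reproduced with a toy example. The sketch below assumes a square structuring element with side length 2r + 1 and two ring-shaped "digits" separated by a gap of three pixels; all names are hypothetical.

```python
def square_offsets(radius):
    """Offsets of a square structuring element with side 2 * radius + 1."""
    span = range(-radius, radius + 1)
    return [(dy, dx) for dy in span for dx in span]


def dilate(binary, radius):
    height, width = len(binary), len(binary[0])
    result = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            if binary[y][x]:
                for dy, dx in square_offsets(radius):
                    if 0 <= y + dy < height and 0 <= x + dx < width:
                        result[y + dy][x + dx] = 1
    return result


def erode(binary, radius):
    height, width = len(binary), len(binary[0])
    offsets = square_offsets(radius)
    # Pixels outside the image are treated as background.
    return [[int(all(0 <= y + dy < height and 0 <= x + dx < width
                     and binary[y + dy][x + dx] != 0
                     for dy, dx in offsets))
             for x in range(width)]
            for y in range(height)]


def closing(binary, radius):
    """Dilation followed by erosion: fills small holes and gaps."""
    return erode(dilate(binary, radius), radius)


def opening(binary, radius):
    """Erosion followed by dilation: removes small or thin structures."""
    return dilate(erode(binary, radius), radius)


def make_two_rings():
    """Two 3x3 rings, each with a one-pixel cavity, three columns apart."""
    image = [[0] * 13 for _ in range(7)]
    for left in (2, 8):
        for y in range(2, 5):
            for x in range(left, left + 3):
                image[y][x] = 1
        image[3][left + 1] = 0  # cavity in the middle of the ring
    return image


rings = make_two_rings()
closed_small = closing(rings, 1)
closed_large = closing(rings, 2)
opened = opening(rings, 1)
print(closed_small[3][3], closed_small[3][6])  # 1 0
print(closed_large[3][6])                      # 1
print(sum(map(sum, opened)))                   # 0
```

With r = 1 the cavities are filled while the two shapes stay separate. With r = 2 the gap between them is filled as well, so they merge into one block. The opening, by contrast, removes the thin rings entirely.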

How does the actual text recognition work?

Which method is used to identify the individual digits depends on the application. One option is the OCR software Tesseract, which was originally developed at Hewlett-Packard with Ray Smith as lead developer and was later released as open source, with Google sponsoring its further development. An alternative is LeNet, a convolutional neural network (CNN) developed by Yann LeCun.

When using Tesseract, the first step is a connected component analysis. The outlines of the individual components are stored and combined into groups. A polygon approximation follows: it searches for straight sections of a character's outline and thus approximates the character as a polygon. To minimize the training effort, the polygons are then normalized to uniform dimensions. Next, feature vectors are derived from the approximation; each contains the coordinates of a point on the polygon and its inclination. More points mean greater accuracy. Classification is then performed with a k-nearest neighbor algorithm: the features of the approximated polygon are compared with the feature vectors of the training data, i.e. characters known to the system, and the character with the best match is assigned.
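The idea of normalized polygons, feature vectors and nearest-neighbor classification can be illustrated with a toy example. This is not Tesseract's actual implementation. The choice of edge midpoints as feature points, the distance measure and all function names are assumptions made purely for illustration.

```python
import math
from collections import Counter


def normalize(polygon):
    """Shift and scale polygon points into the unit square, keeping the aspect ratio."""
    xs = [x for x, _ in polygon]
    ys = [y for _, y in polygon]
    min_x, min_y = min(xs), min(ys)
    scale = max(max(xs) - min_x, max(ys) - min_y) or 1.0
    return [((x - min_x) / scale, (y - min_y) / scale) for x, y in polygon]


def features(polygon):
    """One (x, y, angle) feature per edge: edge midpoint plus inclination."""
    points = normalize(polygon)
    result = []
    for (x0, y0), (x1, y1) in zip(points, points[1:] + points[:1]):
        angle = math.atan2(y1 - y0, x1 - x0) % math.pi  # undirected inclination
        result.append(((x0 + x1) / 2, (y0 + y1) / 2, angle))
    return result


def feature_distance(a, b):
    """Position distance plus inclination difference (wrapping around at pi)."""
    d_angle = abs(a[2] - b[2])
    d_angle = min(d_angle, math.pi - d_angle)
    return math.hypot(a[0] - b[0], a[1] - b[1]) + d_angle


def shape_distance(feats_a, feats_b):
    """Average distance from each feature of A to its closest feature of B."""
    return sum(min(feature_distance(a, b) for b in feats_b)
               for a in feats_a) / len(feats_a)


def classify(polygon, training, k=1):
    """Majority vote among the k training polygons closest to the query."""
    query = features(polygon)
    ranked = sorted(training,
                    key=lambda item: shape_distance(query, features(item[1])))
    votes = Counter(label for label, _ in ranked[:k])
    return votes.most_common(1)[0][0]


# Hypothetical training data: a tall bar and a flat bar.
training = [
    ("I", [(0, 0), (1, 0), (1, 4), (0, 4)]),
    ("-", [(0, 0), (4, 0), (4, 1), (0, 1)]),
]
tall = classify([(10, 10), (12, 10), (12, 19), (10, 19)], training)
flat = classify([(0, 0), (8, 0), (8, 2), (0, 2)], training)
print(tall, flat)  # I -
```

Because the polygons are normalized first, the query shapes are classified correctly even though their size and position differ from the training examples.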

The algorithms now work so well that, in most cases, the characters are read correctly with about 99% certainty.

Sources:

Ohser, J. (2018). Applied Machine Vision and Image Analysis. Munich: Carl Hanser Verlag.

Smith, R. (2007). An Overview of the Tesseract OCR Engine. Ninth International Conference on Document Analysis and Recognition (ICDAR 2007), pp. 629-633. doi:10.1109/ICDAR.2007.4376991

Werner, M. (2021). Digital Machine Vision. Fulda: Springer Vieweg.
